Arm Launches Lumex AI-Driven Smartphone CPUs with Notable Features

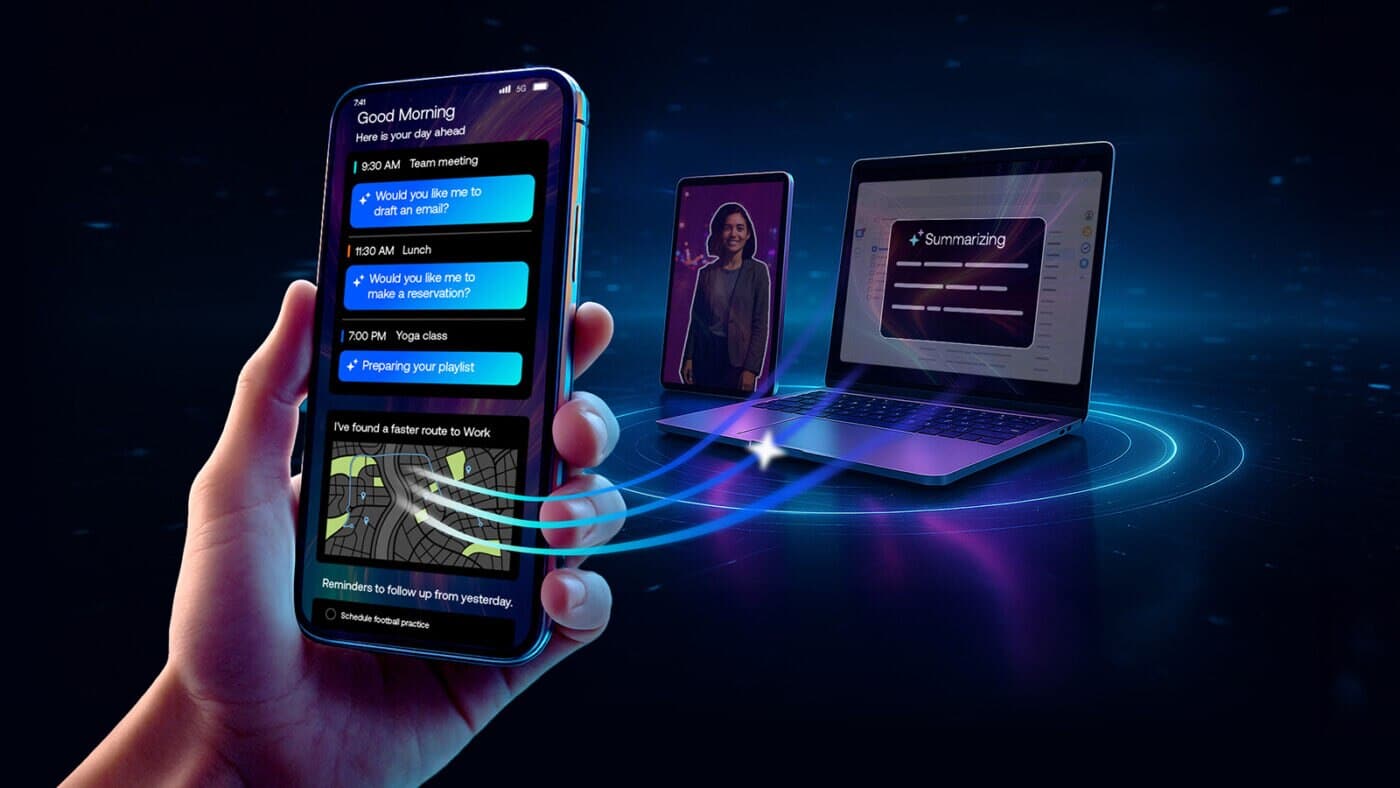

Arm has unveiled its latest Lumex chip designs, which target on-device artificial intelligence (AI) in mobile devices. The next-generation designs promise gains in both performance and efficiency, with up to five times better AI performance than previous models. The Lumex architecture spans a range of devices, from wearables to high-end smartphones, and aims to shorten product development cycles for manufacturers.

Innovative Design for Diverse Applications

Arm’s Lumex chips are designed as a “purpose-built compute subsystem” to address the increasing demand for on-device AI experiences. The new Armv9.3 C1 CPU cluster features integrated SME2 units that enhance AI processing capabilities. The cluster includes four distinct CPU types: C1-Ultra for large-model inferencing, C1-Premium for multitasking, C1-Pro for video playback, and C1-Nano for wearables. This generation delivers a 30% increase in standard benchmark performance, 15% faster application performance, and a 12% reduction in power consumption during everyday tasks.
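To make the three percentages concrete, the short Python sketch below converts each one into a ratio against the previous generation. It assumes "15% faster" means 1.15x throughput, which is one reading of the claim rather than something the announcement spells out, and the helper names are illustrative. The figures describe different workloads, so the sketch deliberately does not multiply them together.

```python
def gain_to_ratio(gain_pct: float) -> float:
    """Convert an 'X% increase' claim into a new/old ratio."""
    return 1.0 + gain_pct / 100.0


def speedup_to_time_ratio(gain_pct: float) -> float:
    """Convert an 'X% faster' claim into relative run time (new / old).

    Assumption: 'X% faster' means throughput rises by X%.
    """
    return 1.0 / gain_to_ratio(gain_pct)


def reduction_to_ratio(cut_pct: float) -> float:
    """Convert an 'X% reduction' claim into a new/old ratio."""
    return 1.0 - cut_pct / 100.0


# Figures quoted in the article, each measured on a different workload.
benchmark_ratio = gain_to_ratio(30)          # benchmark score, new / old
app_time_ratio = speedup_to_time_ratio(15)   # time per app task, new / old
power_ratio = reduction_to_ratio(12)         # everyday-task power, new / old

if __name__ == "__main__":
    print(f"benchmark score: {benchmark_ratio:.2f}x")
    print(f"app task time:   {app_time_ratio:.2f}x")
    print(f"everyday power:  {power_ratio:.2f}x")
```

Under that reading, a task that took 100 ms would take roughly 87 ms, since a 15% speedup is not the same as a 15% cut in run time.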

The Mali G1-Ultra GPU complements the CPUs with a 20% improvement in AI and machine learning inferencing over its predecessor, the Immortalis-G925. It also doubles ray tracing performance for gaming. Arm offers G1-Premium and G1-Pro options for the GPU, but there is no G1-Nano variant.

Streamlined Development with Integrated Software

Accompanying the Lumex chips is a software stack that is ready for Android 16. It includes SME2-enabled KleidiAI libraries and telemetry tools that help developers analyze performance and identify bottlenecks. These resources allow the Lumex platform to be tuned for specific device models. Arm senior director Kinjal Dave said that mobile computing is entering a new era, defined by how intelligence is built, scaled, and delivered.
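Software that depends on SME2 generally has to confirm at run time that the CPU actually offers it. The Python sketch below shows one way to do that on a Linux-based device by scanning the `Features` lines of `/proc/cpuinfo` for an `sme2` flag. It assumes the kernel is recent enough to report that flag, the function names are illustrative, and none of this is part of KleidiAI or Arm's tooling.

```python
from pathlib import Path


def cpu_features(cpuinfo_text: str) -> set[str]:
    """Collect the flags listed on 'Features' lines of cpuinfo-style text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "features":
            flags.update(value.split())
    return flags


def has_sme2(cpuinfo_text: str) -> bool:
    """Return True if any core reports the 'sme2' flag (assumed flag name)."""
    return "sme2" in cpu_features(cpuinfo_text)


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read cpuinfo, returning an empty string where it is unavailable."""
    try:
        return Path(path).read_text()
    except OSError:
        return ""


if __name__ == "__main__":
    print("SME2 reported:", has_sme2(read_cpuinfo()))
```

A library would typically use a check like this to choose between an SME2 code path and a fallback, which is what lets the same application run on older hardware.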

Arm’s strategy positions CPUs as the universal AI engine, especially in light of the current lack of standardization among neural processing units (NPUs). While NPUs are gaining traction in PC chips, Arm’s focus remains on enhancing CPU capabilities to meet the evolving demands of mobile AI applications.

Future Implications for Mobile AI

Arm notes that many popular Google applications are already optimized for SME2, which suggests they will be able to use the enhanced on-device AI features of the next-generation hardware. That readiness could lead to more sophisticated applications and improved user experiences as more AI tasks run on the device itself.

As demand for on-device AI continues to grow, Arm is positioning its Lumex chips as a foundation for the next generation of mobile technology. With the performance gains and integrated software described above, these chips could make smartphones and other mobile devices more capable and more efficient.
