Google Unveils Powerful Ironwood TPU for AI

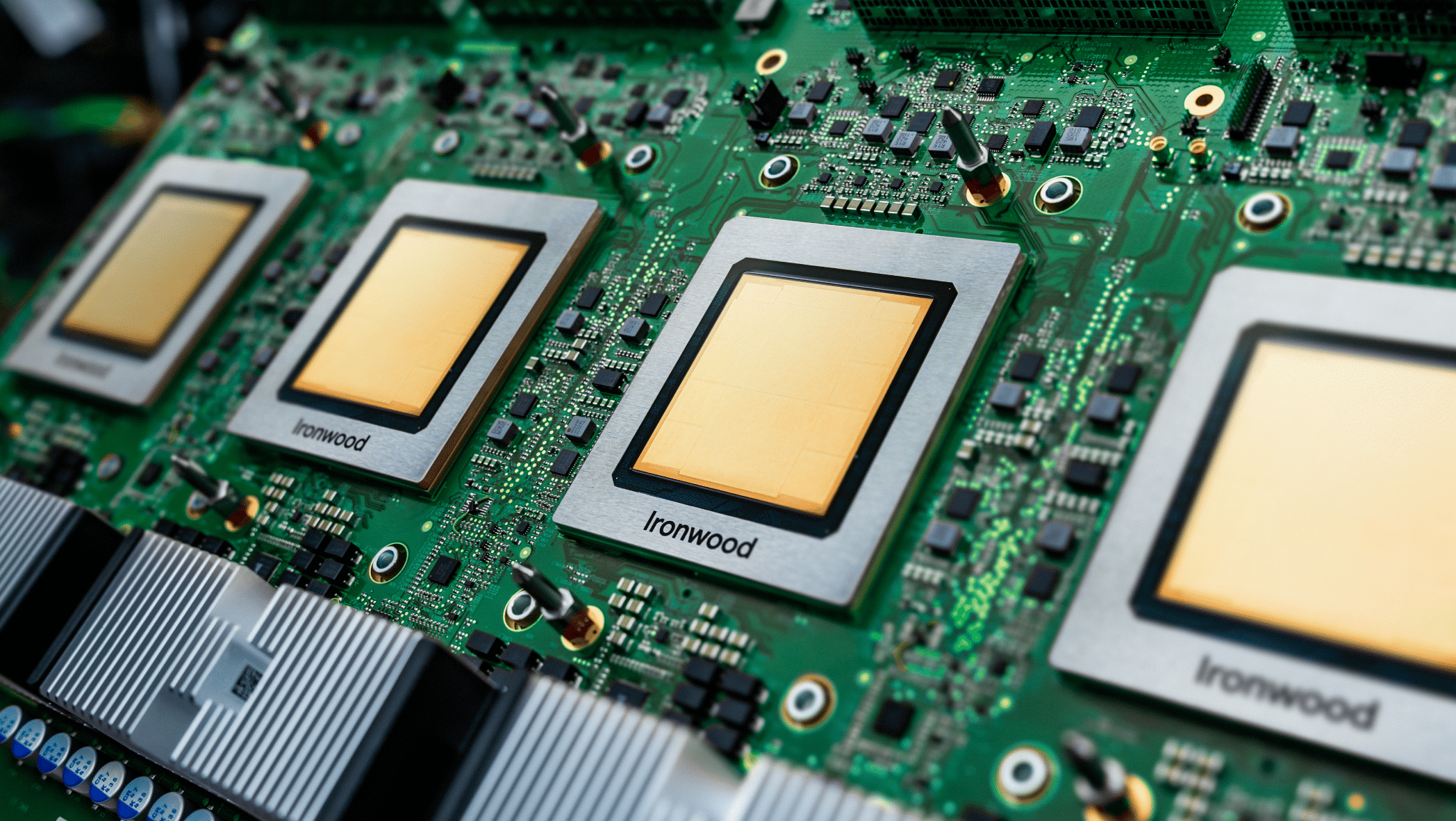

Google has launched its seventh-generation Tensor Processing Unit (TPU), named Ironwood, at the recent Google Cloud Next 25 event. This new chipset is touted as the company’s most powerful and scalable custom artificial intelligence (AI) accelerator to date. Designed specifically for AI inference, Ironwood will soon be available to developers through the Google Cloud platform, marking a significant advancement in AI processing capabilities.

Revolutionizing AI Inference with Ironwood

In a detailed blog post, Google introduced Ironwood as its latest AI accelerator chipset. The company said the new TPU is meant to support a shift from responsive AI systems, which return information for people to interpret, to proactive models that generate insights on their own. Ironwood is aimed in particular at dense large language models (LLMs), mixture-of-experts (MoE) models, and agentic AI systems. These systems are designed to retrieve and generate data collaboratively in order to give users answers rather than raw information.

TPUs are custom-built chipsets optimized for AI and machine learning (ML) workflows. They excel at highly parallel processing, especially for deep learning tasks, while also offering strong power efficiency. Each Ironwood chip has a peak compute capability of 4,614 teraflops (TFLOPs), significantly surpassing its predecessor, Trillium, which was introduced in May 2024. Google plans to offer these chipsets in clusters to maximize processing power for demanding AI applications.

Scalability and Performance Enhancements

The Ironwood TPU can be scaled up to a cluster of 9,216 liquid-cooled chips connected through an Inter-Chip Interconnect (ICI) network. This architecture is part of Google’s Cloud AI Hypercomputer framework. Developers will have access to Ironwood in two configurations: a 256-chip setup and a full 9,216-chip cluster. In its largest configuration, Ironwood can deliver 42.5 exaflops of computing power per pod, which Google says is more than 24 times the 1.7 exaflops of El Capitan, the world’s most powerful supercomputer.
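The pod-level figure follows from the per-chip number quoted earlier. The short Python sketch below redoes that arithmetic using only the values stated in this article; the variable and function names are illustrative, and the comparison takes both peak figures at face value without accounting for how each was measured.

```python
# Figures quoted in the article (peak values, taken at face value).
PEAK_TFLOPS_PER_CHIP = 4_614   # per Ironwood chip
FULL_POD_CHIPS = 9_216         # largest configuration
SMALL_POD_CHIPS = 256          # smaller configuration
EL_CAPITAN_EXAFLOPS = 1.7      # figure cited for El Capitan

TFLOPS_PER_EXAFLOP = 1_000_000  # 1 exaflop = 10^6 teraflops


def pod_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute of a pod, assuming perfect linear scaling."""
    return chips * tflops_per_chip / TFLOPS_PER_EXAFLOP


full_pod = pod_exaflops(FULL_POD_CHIPS, PEAK_TFLOPS_PER_CHIP)
small_pod = pod_exaflops(SMALL_POD_CHIPS, PEAK_TFLOPS_PER_CHIP)
ratio = full_pod / EL_CAPITAN_EXAFLOPS

print(f"9,216-chip pod: {full_pod:.1f} exaflops")
print(f"256-chip pod:   {small_pod:.2f} exaflops")
print(f"Ratio to El Capitan figure: {ratio:.1f}x")
```

Multiplying 9,216 chips by 4,614 TFLOPs gives roughly 42.5 exaflops, which matches the headline number, and dividing by 1.7 gives a ratio of about 25, consistent with the "more than 24 times" claim.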

Additionally, Ironwood TPUs come with more memory: 192GB per chip, six times that of the Trillium chipset. Memory bandwidth has also increased significantly, to 7.2 terabytes per second (TBps) per chip, further boosting the performance of AI applications.
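As a rough illustration of what the memory figure implies, the sketch below derives the per-chip memory of Trillium from the stated six-fold ratio and the total memory of a full 9,216-chip cluster. It uses only numbers from this article, assumes decimal units (1 PB = 1,000,000 GB), and the names are illustrative.

```python
# Figures quoted in the article.
IRONWOOD_GB_PER_CHIP = 192
IRONWOOD_VS_TRILLIUM = 6       # "six times that of the Trillium chipset"
FULL_POD_CHIPS = 9_216

GB_PER_PB = 1_000_000          # assumption: decimal units

# Implied per-chip memory of the previous generation.
trillium_gb_per_chip = IRONWOOD_GB_PER_CHIP / IRONWOOD_VS_TRILLIUM

# Total memory across a full cluster, summed over all chips.
pod_memory_gb = FULL_POD_CHIPS * IRONWOOD_GB_PER_CHIP
pod_memory_pb = pod_memory_gb / GB_PER_PB

print(f"Implied Trillium memory per chip: {trillium_gb_per_chip:.0f} GB")
print(f"Full-cluster memory: {pod_memory_gb:,} GB (about {pod_memory_pb:.2f} PB)")
```

Under these assumptions, a full cluster holds about 1.77 PB of memory in total, and the six-fold ratio implies 32GB per Trillium chip.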

Availability and Future Prospects

Ironwood TPUs are not yet available to developers on Google Cloud. As with previous chipsets, Google is expected to deploy Ironwood first within its internal systems, including those running the company’s Gemini models, before rolling out access to external developers. This phased approach lets Google tune Ironwood’s performance in real-world applications before making it widely available. For developers and businesses that rely on Google Cloud for their AI workloads, Ironwood promises a substantial increase in available inference capacity.
